My core passion lies in developing and studying Computer-Using Agents (CUAs), with a focus on MLLM-based Graphical User Interface (GUI) agents. I aim to elevate these agents from mere task executors to sophisticated systems that exhibit human-like reasoning, generalization, and robust decision-making across complex digital environments.

My work spans the practical building and deployment of these agents and, crucially, the pursuit of multi-dimensional and reliable evaluation methodologies (Agent-as-a-Judge).

I am currently a final-year undergraduate student in Communication Engineering at Xidian University and Heriot-Watt University, and I am actively seeking PhD and internship opportunities.

- 2025.10: 🎉🎉 Our paper “You Don’t Know Until You Click: Automated GUI Testing for Production-Ready Software Evaluation” has been accepted to the Scaling Environments for Agents (SEA) Workshop at NeurIPS 2025.

📝 Publications

You Don’t Know Until You Click: Automated GUI Testing for Production-Ready Software Evaluation

Yutong Bian*, Xianhao Lin, Yupeng Xie et al.

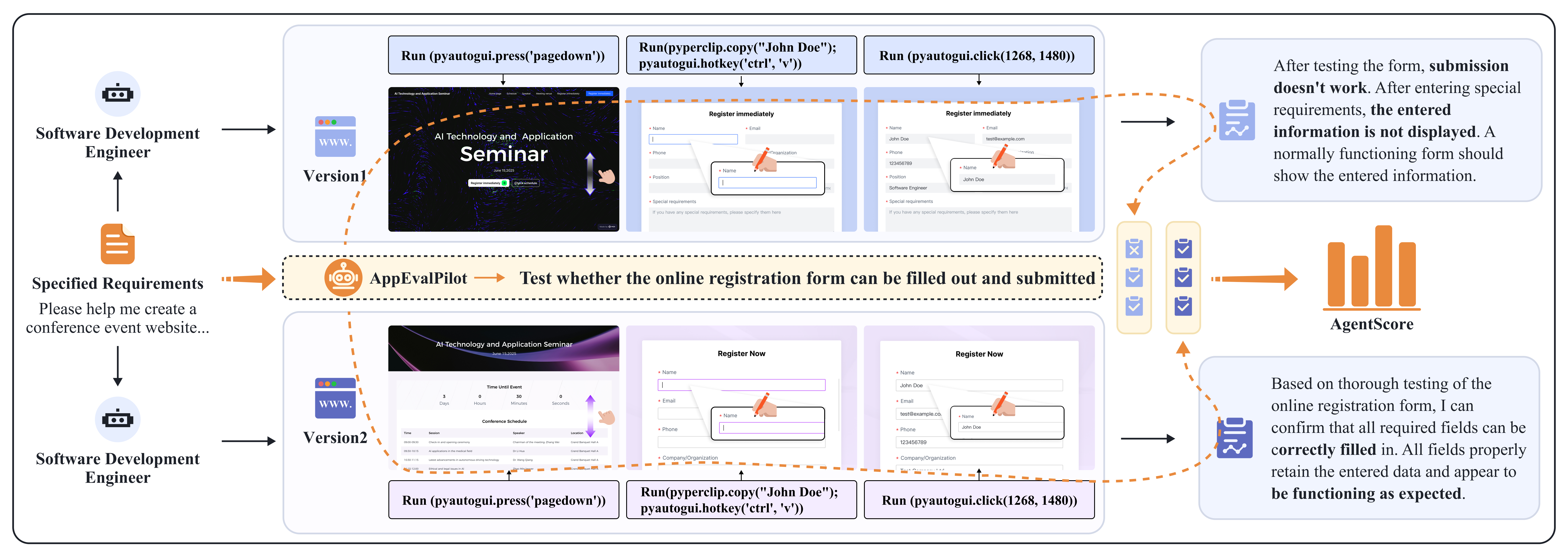

- As the lead for AppEvalPilot, I designed and implemented a system to dynamically assess software functionality through UI interaction, moving beyond the limitations of static analysis for LLM-based software engineers.

- My work involved creating automated test case generation and a test execution agent capable of complex GUI interactions.

- Experiments showed a high correlation (0.91) between AppEvalPilot’s assessments and those of human experts, and AppEvalPilot was also 55% faster and 94.8% cheaper.

📖 Education

- 2021.09 - 2026.06, B.Eng. in Communication Engineering, Xidian University, China & Heriot-Watt University, UK.

  - GPA: 3.8/4.0

💻 Internship

- 2024.09 - 2025.05, MetaGPT, Shenzhen, China.

  - Research Intern

  - Supervisors: Sirui Hong, Haoming Tang, Chenglin Wu

  - Topics: GUI Agent; Agent Training; Benchmark

🚀 Projects

- OSAgent: Cross-platform Intelligent Assistant (Sep. 2024 - May 2025)

  - Focused on developing a universal, stable, and efficient GUI agent framework.

  - Contributed to the architecture design and to the perception, planning, and execution modules.

  - Achieved state-of-the-art performance on SPA-Bench (mobile) cross-application tasks (26.7% vs. 13.3% for the previous SOTA).

- R1-Like GUI Agent Training (Apr. 2024 - May 2025)

  - Focused on enhancing the core element grounding capability of GUI agents using GRPO.

  - Designed a data collection and refinement pipeline and a multi-component reward function.

  - Demonstrated that training on 1k carefully selected samples achieves performance comparable to SOTA models trained on millions of samples, significantly improving GUI grounding accuracy on benchmarks such as ScreenSpot and ScreenSpot-Pro.